Automation in SEO: When to use it, and when to think twice

Over the last three years, the rise of AI has increased the amount of work we as SEOs and marketers can automate, and has made marketing automation far more accessible and affordable than it has ever been.

This is a largely positive movement. We no longer have to spend brainpower on menial tasks and can instead focus our cognitive effort on the work that needs our expert, human-led insight.

However, we must also recognise automation as a double-edged sword. Whilst it’s tempting to automate as much as we can, we must be cautious and understand that AI isn’t “intelligent” in the same way that a human is, and we can’t sacrifice quality for convenience. Automation should enhance human intelligence, not replace it.

Why you shouldn’t let automation do all the thinking

Your brain is like a muscle: if you don’t exercise your critical thinking, it will atrophy. The result is dulled problem-solving skills and an inability to adapt to unusual circumstances or nuances, which ultimately makes you a less effective marketer.

There are vast complexities across the digital marketing landscape (including SEO) that automation tools and AI are ineffective at picking up on. Your lived experience is your value here, so don’t be afraid to step away from the tools and think laterally to solve problems under your own steam.

If you’d like a read, MIT have just released a study into the impact that using LLMs can have on your brain. It suggests that an over-reliance on LLMs like ChatGPT may weaken problem-solving, memory, and learning, especially for younger or developing brains.

Smart Automation in Google Sheets

IMPORTXML for Meta Data Audits

=IMPORTXML is a beautifully simple formula that allows you to pull on-page data from any URL, directly into a Google Sheet.

The formula achieves this by using something called XPath, which is a syntax for selecting parts of an XML document (like a web page). With it, you can tell the formula exactly which parts of the page you wish to extract into your document.
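
To make the XPath idea concrete outside of Sheets, here is a minimal Python sketch using the standard library’s xml.etree.ElementTree, which supports a limited subset of XPath. The sample page is made up and deliberately well-formed (an assumption; real pages are often not valid XML and usually need a more forgiving HTML parser).

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample page used purely for illustration.
SAMPLE_PAGE = """
<html>
  <head><title>Blue Widgets | Example Shop</title></head>
  <body>
    <h1>Blue Widgets</h1>
    <a href="/red-widgets">Red widgets</a>
    <a href="/contact">Contact</a>
  </body>
</html>
"""

root = ET.fromstring(SAMPLE_PAGE)

# ".//title" is ElementTree's spelling of the "//title" XPath used in Sheets:
# find a <title> element anywhere below the root and return its text.
title = root.findtext(".//title")
h1 = root.findtext(".//h1")

# Attribute selection ("//a/@href" in full XPath) takes two steps here,
# because ElementTree only implements a subset of XPath.
links = [a.get("href") for a in root.findall(".//a")]

print(title)
print(h1)
print(links)
```

The same three expressions (title, H1, link targets) are the ones you would most often pass to IMPORTXML.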

A great use case for this is pulling Page Titles & H1s for a batch of URLs. For example, if your URL is in cell A5, you can use the below formula to scrape and import the Page Title from that URL:

=IFERROR(INDEX(IMPORTXML(A5, "//title"), 1), "Missing Data")

I even chucked in a cheeky IFERROR for neatness’ sake.

This is much quicker than crawling the website, exporting the data and reimporting it into your sheet, and it frees up a good chunk of your attention for the analysis itself.
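
If you ever outgrow the sheet, the same audit can be sketched in Python. The example below is a rough equivalent rather than a drop-in replacement: it uses the standard library’s html.parser instead of XPath, works on HTML you have already downloaded, and the class and function names (TitleH1Parser, audit_html) are hypothetical.

```python
from html.parser import HTMLParser


class TitleH1Parser(HTMLParser):
    """Collects the text of the first <title> and first <h1> in a page."""

    def __init__(self):
        super().__init__()
        self.found = {"title": None, "h1": None}
        self._current = None  # tracked tag we are currently inside, if any

    def handle_starttag(self, tag, attrs):
        # Only start capturing the first occurrence of each tracked tag.
        if tag in self.found and self.found[tag] is None:
            self._current = tag
            self.found[tag] = ""

    def handle_data(self, data):
        if self._current:
            self.found[self._current] += data

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None


def audit_html(html, fallback="Missing Data"):
    """Return (title, h1) for one page, with a fallback like the IFERROR above."""
    parser = TitleH1Parser()
    parser.feed(html)
    parser.close()
    return tuple(
        (parser.found[tag] or "").strip() or fallback for tag in ("title", "h1")
    )


page = "<html><head><title> Home </title></head><body><h1>Welcome <em>back</em></h1></body></html>"
print(audit_html(page))
```

Fetching each URL (for example with urllib.request) is left out on purpose, so the parsing step can be checked on its own.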

GPT for Sheets = Automated Commentary

GPT for Slides, Docs & Sheets is a nifty little Google Sheets extension that utilises the GPT Workspace to bring LLM capabilities directly into your document. You can install it by opening your Google Sheet, navigating to Extensions > Add-ons > Get add-ons, and installing from there.

Once activated, you can simply create a =GPT("") formula and input your prompt within the quotation marks to generate a response from the GPT Workspace. For example:

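=GPT("Based on this data: " & TEXTJOIN(", ", TRUE, B2:D2) & ". Write a short analysis of SEO performance and suggest one improvement.")

TEXTJOIN flattens the range into a single comma-separated string; joining a multi-cell range directly with & may not pass every cell into the prompt.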

This will automatically pull your sheet’s data into the prompt, so you can fill the formula down and produce analysis en masse without editing the data references in each prompt.

However, you must check the output each time and not trust it completely. If your outputs aren’t making sense, refine your prompt and go again.
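
The prompt-templating idea is not specific to Sheets. As a minimal sketch, the Python below builds one prompt per row of data; the metric names and values are invented for illustration, build_prompt is a hypothetical helper, and sending the prompt to a model is deliberately left out.

```python
def build_prompt(row):
    """Turn one row of metrics into a prompt string, mirroring the =GPT() formula."""
    data = ", ".join(f"{name}: {value}" for name, value in row.items())
    return (
        f"Based on this data: {data}. Write a short analysis of SEO "
        "performance and suggest one improvement."
    )


# Made-up rows standing in for B2:D2, B3:D3, and so on.
rows = [
    {"clicks": 120, "impressions": 4000, "avg position": 8.2},
    {"clicks": 15, "impressions": 9000, "avg position": 14.6},
]

prompts = [build_prompt(row) for row in rows]
for prompt in prompts:
    print(prompt)
```

Keeping the template in one place means a refined prompt is applied to every row at once, which is the same benefit the formula gives you.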

Scraping Reddit for Untapped Content Ideas

Reddit is a goldmine for spotting questions, frustrations, and trends before they hit mainstream search demand. If someone can’t find an answer on Google, Reddit is often the next stop. That makes it a powerful platform for identifying content gaps and emerging topics.

Why Reddit is a goldmine for pre-demand content discovery

Reddit users speak in natural language, ask unfiltered questions, and often go deep into niche conversations. This gives you direct access to how real people think and talk about topics in your industry. This is perfect for content ideation, FAQs, or supporting articles for a pillar cluster.

Intro to Reddit’s API & using Python or Google Colab

To scrape Reddit posts programmatically, you can use the Reddit API. Combine it with Python or Google Colab and you’ve got a lightweight, automated way to extract post titles, upvotes, comments, and more from any subreddit.

Tools like PRAW (the Python Reddit API Wrapper, installed as praw) make this easier to manage, even for beginners. Here’s a simple outline:

- Authenticate via Reddit’s API

- Target relevant subreddits

- Extract post titles or threads that mention your keywords

- Export as a CSV for further analysis
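
The outline above can be sketched roughly as follows. This assumes you have registered a Reddit app and installed praw; the credentials, user agent, and helper names (fetch_posts, filter_by_keywords, to_csv) are placeholders, not a prescribed setup.

```python
import csv
import io


def fetch_posts(subreddit_name, limit=100):
    """Fetch recent posts with PRAW. Credentials below are placeholders."""
    import praw  # third-party: pip install praw

    reddit = praw.Reddit(
        client_id="YOUR_CLIENT_ID",          # placeholder
        client_secret="YOUR_CLIENT_SECRET",  # placeholder
        user_agent="seo-research-script by u/your_username",  # placeholder
    )
    return [
        {
            "title": post.title,
            "score": post.score,
            "num_comments": post.num_comments,
            "url": "https://www.reddit.com" + post.permalink,
        }
        for post in reddit.subreddit(subreddit_name).new(limit=limit)
    ]


def filter_by_keywords(posts, keywords):
    """Keep posts whose title mentions any keyword (case-insensitive)."""
    lowered = [k.lower() for k in keywords]
    return [p for p in posts if any(k in p["title"].lower() for k in lowered)]


def to_csv(posts):
    """Serialise posts to CSV text, ready to save or upload."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["title", "score", "num_comments", "url"]
    )
    writer.writeheader()
    writer.writerows(posts)
    return buffer.getvalue()
```

A typical run would be to_csv(filter_by_keywords(fetch_posts("SEO"), ["canonical", "hreflang"])), with the result written to a file. The filtering and export steps do not touch the network, so they can be tested with hand-made rows first.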

Example: Exporting CSV > Feeding into LLM for Pillar Ideation

Once you’ve pulled a list of common Reddit questions or complaints, you can feed those into a GPT model to cluster themes or propose pillar/cluster content ideas. This saves huge amounts of time compared to manual content brainstorming.
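
One way to prepare that hand-off, sketched under the assumption that your CSV has a title column: de-duplicate the titles, then wrap them in batches so each prompt stays a manageable size. The function names, prompt wording, and batch size are all illustrative choices, and the call to the model itself is not shown.

```python
import csv
import io


def load_titles(csv_text):
    """Read the 'title' column from CSV text, dropping case-insensitive duplicates."""
    seen, titles = set(), []
    for row in csv.DictReader(io.StringIO(csv_text)):
        title = row["title"].strip()
        if title and title.lower() not in seen:
            seen.add(title.lower())
            titles.append(title)
    return titles


def build_cluster_prompts(titles, batch_size=50):
    """Split titles into batches and wrap each batch in a clustering prompt."""
    prompts = []
    for start in range(0, len(titles), batch_size):
        batch = titles[start:start + batch_size]
        numbered = "\n".join(f"{i}. {t}" for i, t in enumerate(batch, 1))
        prompts.append(
            "Group these Reddit post titles into themes and suggest one pillar "
            "page plus supporting cluster articles for each theme:\n" + numbered
        )
    return prompts


# Made-up export used only to show the flow.
csv_text = (
    "title,score\n"
    "How do I fix crawl errors?,10\n"
    "how do i fix crawl errors?,3\n"
    "Is link building dead?,7\n"
)
titles = load_titles(csv_text)
print(build_cluster_prompts(titles)[0])
```

Whatever the model returns is a starting point for ideation, so the clusters still need a human sense-check before they become a content plan.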

Pro tip: Always check the rules of each subreddit before scraping to avoid getting your bot banned.

Integrating with Screaming Frog

The old reliable Screaming Frog crawler has evolved way beyond basic audits. Recent updates have introduced powerful integrations that make technical and content SEO more connected than ever.

Embedding-Powered Semantic Analysis (v22+)

Screaming Frog now supports integration with LLMs and word embeddings, meaning you can assess semantic similarity between pages. Use cases include:

- Identifying content cannibalisation

- Supporting topic clustering efforts

- Pinpointing pages with overlapping intent for consolidation

This feature helps move your audits from purely technical to content-aware in seconds.
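
For intuition about what “semantic similarity” means here, the sketch below compares page embeddings with cosine similarity and flags close pairs. It is not Screaming Frog’s implementation: the vectors are toy three-dimensional values, and the 0.9 threshold and function names are assumptions chosen for the example.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def similar_pairs(embeddings, threshold=0.9):
    """Return (url_a, url_b, score) for page pairs at or above the threshold."""
    pairs = []
    for (url_a, vec_a), (url_b, vec_b) in combinations(embeddings.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 3)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


# Toy vectors; real embeddings have hundreds of dimensions.
pages = {
    "/seo-audit-guide": [0.9, 0.1, 0.0],
    "/how-to-audit-seo": [0.8, 0.2, 0.0],
    "/contact": [0.0, 0.1, 0.9],
}
print(similar_pairs(pages))
```

Pairs that score highly are candidates for a cannibalisation or consolidation review, not automatic merges.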

GA4 + GSC Integrations for Crawl-Time Insights

With built-in support for the GA4 and GSC APIs, you can pull performance data into Screaming Frog during your crawl. No more exporting and VLOOKUPs!

This means you can:

- See page traffic and engagement metrics right next to crawl depth and indexability

- Prioritise fixes based on performance

- Combine UX and SEO data in one spreadsheet

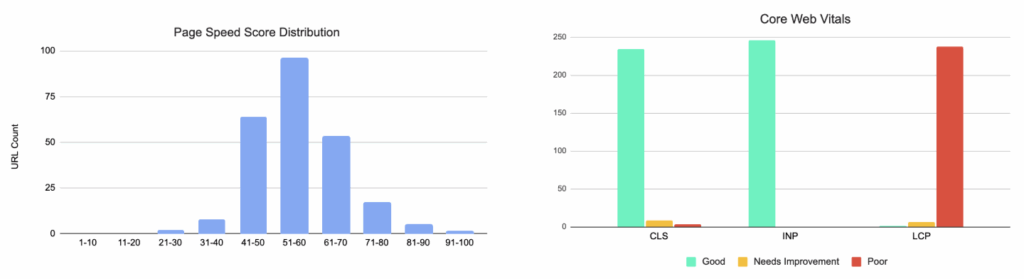
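
Once crawl and performance data sit in the same rows, prioritisation becomes a simple filter and sort. The sketch below assumes an export with url, indexability, clicks, and crawl_depth fields; those column names and the sample rows are hypothetical and will differ in your own export.

```python
def prioritise_fixes(crawl_rows, min_clicks=1):
    """Return non-indexable pages that still earn clicks, busiest first."""
    flagged = [
        row for row in crawl_rows
        if row["indexability"] != "Indexable" and row["clicks"] >= min_clicks
    ]
    return sorted(flagged, key=lambda row: row["clicks"], reverse=True)


# Made-up rows standing in for a crawl export enriched with GSC clicks.
crawl = [
    {"url": "/pricing", "indexability": "Non-Indexable", "clicks": 340, "crawl_depth": 2},
    {"url": "/blog/old-post", "indexability": "Non-Indexable", "clicks": 0, "crawl_depth": 5},
    {"url": "/", "indexability": "Indexable", "clicks": 1200, "crawl_depth": 0},
    {"url": "/guides/seo", "indexability": "Non-Indexable", "clicks": 55, "crawl_depth": 3},
]

for row in prioritise_fixes(crawl):
    print(row["url"], row["clicks"])
```

The same pattern works for any pairing of a technical flag with a performance metric, such as deep pages with high engagement.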

Pulling Core Web Vitals with the PSI API

With a free PageSpeed Insights API key, you can pull Core Web Vitals scores directly into your crawl. This enables CWV analysis at scale, which is ideal for flagging performance bottlenecks by page template or section.

You can even visualise this in Google Sheets using your own charts. This is great for turning technical data into client-ready visuals.
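
If you want the same data outside the crawler, the PageSpeed Insights API can also be called directly. The following is a minimal sketch against the v5 runPagespeed endpoint using only the standard library; the helper names are hypothetical, the API key is a placeholder, and field data is only returned for pages with enough real-user traffic.

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key, strategy="mobile"):
    """Build the request URL for one page."""
    query = urllib.parse.urlencode(
        {"url": page_url, "key": api_key, "strategy": strategy}
    )
    return f"{PSI_ENDPOINT}?{query}"


def extract_field_metrics(response):
    """Pull the field-data percentile and rating for each metric present."""
    metrics = response.get("loadingExperience", {}).get("metrics", {})
    return {
        name: {"percentile": data.get("percentile"), "category": data.get("category")}
        for name, data in metrics.items()
    }


def fetch_cwv(page_url, api_key):
    """Call the API for one URL (needs network access and a valid key)."""
    url = build_psi_url(page_url, api_key)
    with urllib.request.urlopen(url, timeout=60) as resp:
        return extract_field_metrics(json.load(resp))
```

Looping fetch_cwv over a URL list and writing the results to CSV gives you a table that drops straight into a sheet for charting. Mind the API’s rate limits when running this over a large site.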

Finding Historic URLs via Archive.org

Lost access to legacy site structures? Archive.org’s Wayback Machine is your friend.

Using IMPORTXML or Archive.org’s API, you can extract historic internal links from archived versions of your site. For example:

=IMPORTXML("https://web.archive.org/web/*/https://example.com/*", "//a/@href")

This lets you:

- Rebuild lost pages

- Reclaim valuable backlinks

- Identify orphaned or deleted content

Combine this with a Screaming Frog crawl and redirect mapping to recover link equity and restore SEO visibility after a site rebuild.
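
For the API route, the Wayback Machine exposes a CDX endpoint that lists captured URLs as JSON. It tends to be the more dependable option, because the wildcard listing page appears to be rendered client-side and IMPORTXML may come back empty. The sketch below is a minimal example with hypothetical helper names; example.com and the limit of 1000 are placeholders.

```python
import json
import urllib.parse
import urllib.request

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_url(domain, limit=1000):
    """Build a CDX query for every captured URL under a domain."""
    params = {
        "url": f"{domain}/*",        # everything under the domain
        "output": "json",
        "fl": "original,timestamp",  # fields to return
        "filter": "statuscode:200",  # only captures that returned 200
        "collapse": "urlkey",        # one row per unique URL
        "limit": limit,
    }
    return f"{CDX_ENDPOINT}?{urllib.parse.urlencode(params)}"


def parse_cdx_rows(rows):
    """The JSON output is a list of lists whose first row is the header."""
    if not rows:
        return []
    header, *records = rows
    return [dict(zip(header, record)) for record in records]


def fetch_historic_urls(domain):
    """Query the CDX API (needs network access)."""
    with urllib.request.urlopen(build_cdx_url(domain), timeout=60) as resp:
        return parse_cdx_rows(json.load(resp))
```

The resulting URL list can be fed into a list-mode crawl to see which historic pages now return errors and need redirects.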

When Not to Automate SEO Tasks

Content Writing

While AI content tools can help with outlines, summaries, or repurposing, they still fall short on depth, originality, and credibility. Over-reliance on them risks:

- Poor E-E-A-T signals

- Repetitive, low-value writing

- Losing brand voice and nuance

Use AI for brainstorming and formatting, but keep humans at the helm for long-form content, tone, and fact-checking.

Internal Linking

Tools like Ahrefs or Surfer can flag linking opportunities, but they often miss context. You might end up linking a blog post to an irrelevant service page, or reusing the same anchor text across too many links.

Instead:

- Use automation as a guide, not a rule

- Layer human review for accuracy and logic

- Prioritise internal links that add value and context

When Automation Shines Brightest

Reporting & Looker Studio Automation

Reporting is where automation becomes a no-brainer. Set up a Looker Studio (formerly Data Studio) dashboard, connect your data sources, and automate:

- Weekly/Monthly SEO summaries

- GSC trend tracking by query/category

- Auto-annotated changes (e.g. algorithm updates or site changes)

Bonus: Use GPT for Slides, Docs & Sheets to summarise trends or suggest improvements directly in your reporting layer. You’ll save hours and reduce human error.

Automation as a Crutch, Not a Replacement

So, where does that leave us?

Automation in SEO is incredible when used strategically. It can cut down on repetitive tasks, free up cognitive load, and accelerate insight generation. But it can’t replace your expertise, intuition, or experience.

The best approach? Let automation do the heavy lifting, and keep humans steering the ship.

Got a question about AI, automation or general digital marketing practices in an ever-evolving landscape? Get in touch today!

The post Automation in SEO: When to use it, and when to think twice appeared first on Hallam.