On-device LLMs: The Disruptive Shift in AI Deployment

Large Language Models (LLMs) have reshaped how we interact with technology, powering everything from smart search to AI writing assistants. But until now, their power has mostly lived in the cloud.

That’s starting to change.

On-device LLMs are bringing this intelligence directly onto your phone, laptop, or wearable, enabling real-time language understanding, generation, and decision-making without needing to ping a server constantly.

Imagine your device summarizing documents, translating conversations, or running a personal AI assistant, even when you're offline. This shift unlocks a future that's faster, more private, and always available.

In this blog, we explore what makes on-device LLMs a game-changer and why businesses should pay attention now.

What Are On-Device LLMs?

On-device LLMs are compact, optimized versions of large language models that run directly on hardware like smartphones, laptops, or wearables, without relying on the cloud.

Unlike traditional models that process data remotely, these run locally. Your device can understand and respond to text, voice, or context with minimal delay, keeping data on the device and working even when offline.

They are designed for tasks like summarizing notes, managing to-dos, or offering AI assistance, all without sending your data anywhere else.

This shift brings powerful benefits:

- Privacy – your data stays with you

- Speed – no network round trip, so lower latency

- Offline use – works even without internet

Where Are They Used?

You have likely already used on-device LLMs without realizing it. Examples include:

- Smart autocomplete and text suggestions

- Voice assistants with offline understanding

- Mobile apps with real-time summarization or translation

- AI features in secure enterprise environments


Why Do On-Device LLMs Matter?

On-device large language models are more than a tech novelty. They represent a fundamental shift in how AI serves users, with benefits that directly affect privacy, speed, accessibility, and cost:

1. Enhanced Privacy by Design

With on-device processing, sensitive data stays on your device, never sent to external servers. This is crucial for protecting personal information in sectors like healthcare, finance, and everyday consumer apps. For example, voice dictation or message transcription happens locally, keeping conversations truly private.

2. Reduced Latency

Because the AI runs directly on your device, responses come almost instantly, with no waiting on cloud servers. This makes a noticeable difference in real-time interactions, such as seamless language translation or voice commands.

3. Offline Capability

On-device models work without internet access, enabling AI-powered features anywhere, from remote travel spots to offline fieldwork, where connectivity is limited or unreliable.

4. Cost and Energy Efficiency

Processing locally reduces reliance on costly cloud infrastructure and lowers data transmission, saving money for both providers and users. Plus, optimized on-device AI chips can extend battery life, making devices more energy-efficient.

In short, on-device LLMs are set to make AI smarter, faster, and more private, right where we need it most: in our own hands.

How Do On-Device LLMs Work?

On-device LLMs run AI models directly on your device, using the local CPU, GPU, or specialized AI chips, without sending your data to the cloud. Here’s how they typically operate:

1. Model Storage

Compressed AI models are securely stored on your device’s storage (like SSD or flash memory), ready to be accessed whenever needed.
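How much storage a model needs depends mostly on its parameter count and the numeric precision of each weight. The sketch below is a rough back-of-the-envelope estimate under stated assumptions: the 3-billion-parameter model is hypothetical, and real model files add overhead for quantization scales, metadata, and tokenizer data that this ignores.

```python
# Rough estimate of how much storage a model's weights need at different
# numeric precisions. Ignores file-format overhead, tokenizer files, and
# any per-group scale factors that quantized formats also store.

def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 3e9  # a hypothetical 3-billion-parameter model
    for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
        print(f"{label}: {weight_footprint_gb(params, bits):.1f} GB")
```

For this hypothetical model, the estimate drops from 12 GB at FP32 to 1.5 GB at INT4, which is the difference between "won't fit on a phone" and "fits comfortably in flash storage".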

2. Local Processing

AI computations happen on-device using hardware optimized for AI tasks, from versatile CPUs and powerful GPUs to energy-efficient NPUs and custom chips, delivering fast and efficient inference.

3. Software and Model Optimization

Advanced software frameworks (like TensorFlow Lite or Core ML) work alongside techniques such as quantization, pruning, and knowledge distillation to shrink model size and speed up processing, usually with only a small loss in accuracy.
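Quantization is the simplest of these techniques to show in code. Below is a minimal sketch of symmetric, per-tensor INT8 quantization in plain Python; it illustrates the core idea only and is not how TensorFlow Lite or Core ML implement quantization internally. The function names are hypothetical.

```python
# Minimal sketch of symmetric, per-tensor INT8 post-training quantization.
# Production toolchains add per-channel or per-group scales, calibration data,
# and optimized integer kernels; this only shows the core mapping.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9, -0.55]
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    print(q, scale)
    # Rounding error per weight is at most half a quantization step (scale / 2).
    print(max(abs(a - b) for a, b in zip(w, approx)))
```

Each weight now needs 1 byte instead of 4, at the cost of a bounded rounding error. That error is the "small loss in accuracy" the trade-off refers to.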

4. Hybrid Approach

For especially complex tasks, some systems can combine local AI with optional cloud assistance, giving users flexibility and performance without sacrificing privacy.
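One way to picture a hybrid setup is as a small routing policy that decides, per request, whether to stay local or ask the cloud for help. The sketch below is entirely hypothetical: the `Request` fields, the `route` function, and the 4,096-token limit are illustrative assumptions, not part of any specific product.

```python
# Hypothetical routing policy for a hybrid on-device/cloud setup.
# Field names and the context-limit threshold are illustrative assumptions.
from dataclasses import dataclass


@dataclass
class Request:
    prompt_tokens: int        # length of the input after tokenization
    needs_private_data: bool  # e.g., reads the user's messages or health data
    online: bool              # current network availability
    user_allows_cloud: bool   # explicit user opt-in for cloud assistance


LOCAL_CONTEXT_LIMIT = 4096  # hypothetical on-device context window (tokens)


def route(req: Request) -> str:
    """Return 'local' or 'cloud' for a single request."""
    # Privacy-sensitive work, offline use, and no opt-in always stay on device.
    if req.needs_private_data or not req.online or not req.user_allows_cloud:
        return "local"
    # Escalate only when the request exceeds what the local model can handle.
    if req.prompt_tokens > LOCAL_CONTEXT_LIMIT:
        return "cloud"
    return "local"


if __name__ == "__main__":
    print(route(Request(12_000, False, True, True)))  # long, non-sensitive
    print(route(Request(12_000, True, True, True)))   # long but private
```

Ordering the privacy check first is the design choice that preserves the "without sacrificing privacy" promise: a long but private request stays local, and a real system would then need to chunk or truncate the input rather than send it out.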

5. Local Personalization

On-device models can adapt to your behavior during idle times, updating and improving themselves while keeping your data private and local.

The good news is that on-device LLMs are already making their way to market. Let's look at some examples.

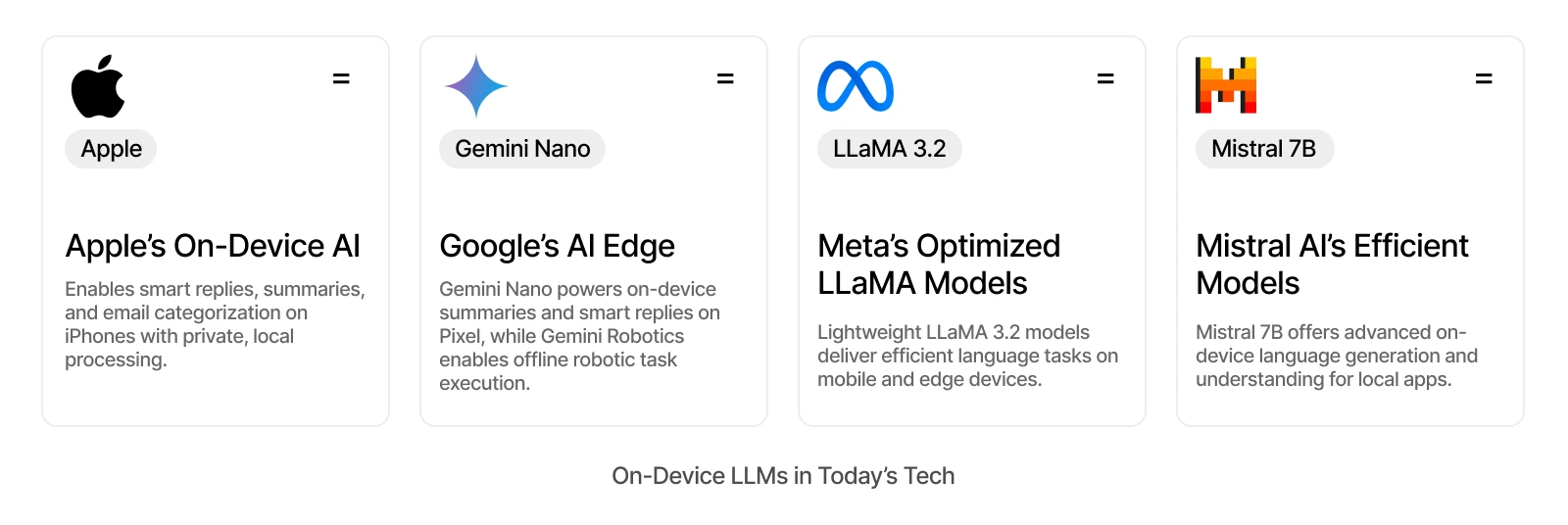

Leading the Charge: On-Device LLMs in Today’s Tech

1. Apple’s On-Device AI

Apple has introduced a suite of on-device AI models designed to run efficiently on iPhones using the Neural Engine. These models enable features like AI-powered email categorization, smart replies, and summaries, all while ensuring user data remains private and processing is done locally on the device.

2. Google’s AI Edge

Google's Gemini Nano model powers on-device generative AI features on the Pixel 8 Pro. Leveraging the Tensor G3 chip, it supports functionalities like summarizing recordings in the Recorder app and providing smart replies in Gboard, all without requiring an internet connection.

Beyond smartphones, Google's Gemini Robotics On-Device model pushes the boundaries of on-device AI by enabling robots to perform complex physical tasks entirely offline. This technology powers robots capable of folding laundry, packing items, and even executing athletic moves, all without needing cloud connectivity. This shows the expanding potential of on-device AI in robotics and edge computing.

3. Meta’s Optimized LLaMA Models

Meta’s LLaMA 3.2 models, particularly the 1B and 3B versions, are optimized for mobile and edge devices. These lightweight models utilize techniques like pruning and knowledge distillation to ensure efficient performance on devices with limited computational resources.
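To give a feel for what pruning does, here is a minimal sketch of unstructured magnitude pruning, a generic textbook technique. It is shown for illustration only and is not the structured pruning procedure Meta used for Llama 3.2; the function name is hypothetical.

```python
# Unstructured magnitude pruning: zero out the smallest-magnitude weights.
# Generic illustration only, not Meta's Llama 3.2 pruning procedure.

def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Return a copy of `weights` with the `sparsity` fraction of
    smallest-magnitude entries set to 0.0."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices of weights sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2]
    print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.0]
```

Zeroed weights only save memory and compute if the runtime stores them sparsely or has sparse kernels. Structured pruning instead removes whole neurons, attention heads, or layers, which shrinks the dense matrices directly and is easier to accelerate on mobile hardware.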

4. Mistral AI’s Efficient Models

Mistral 7B is an open-weight language model with 7.3 billion parameters. It uses grouped-query attention and sliding-window attention to speed up inference and reduce memory use, and at release it outperformed the larger Llama 2 13B on many benchmarks. Its size makes it better suited to laptops and high-end devices with ample memory than to most phones.

On-Device LLMs: What You Need to Know About Their Limits

On-device LLMs offer incredible opportunities but come with certain limitations. Cloud-based models can be enormous and resource-intensive, whereas on-device models must be compact enough to run efficiently on limited hardware. Shrinking a model this way often costs some accuracy and contextual understanding.

1. Model Size & Accuracy

To run smoothly on limited hardware, models must be smaller and optimized. This means they might not yet match the broad knowledge or deep reasoning of large cloud-based models.

2. Limited Context Windows

Memory constraints on devices restrict how much text these models can handle at once, which can impact performance in complex conversations or tasks.
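A big part of this memory pressure comes from the key-value (KV) cache that transformer models keep for every token in the context. The sketch below estimates its size; the configuration (16 layers, 8 KV heads, head dimension 64) is a hypothetical small model, not taken from any specific product.

```python
# Back-of-the-envelope KV-cache size for a decoder-only transformer.
# Each token in the context stores one key and one value vector per layer,
# so memory grows linearly with context length.

def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed for the KV cache (default 2 bytes per value = FP16)."""
    # Factor 2 = one key tensor + one value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * context_len * bytes_per_value


if __name__ == "__main__":
    # Hypothetical small-model configuration (not a specific model).
    cfg = dict(num_layers=16, num_kv_heads=8, head_dim=64)
    for ctx in (2_048, 8_192, 32_768):
        mib = kv_cache_bytes(context_len=ctx, **cfg) / 2**20
        print(f"{ctx:>6} tokens -> {mib:.0f} MiB (FP16)")
```

For this configuration the cache grows from 64 MiB at 2,048 tokens to 1 GiB at 32,768 tokens, on top of the model weights, which is why on-device context windows tend to be shorter than cloud ones.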

3. Computational & Battery Constraints

Edge devices naturally have less processing power and memory than cloud servers. Running AI locally can also impact battery life if not carefully managed.
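A common rule of thumb shows why model size drives both speed and power draw: when generating text token by token, a device reads roughly all model weights from memory for each token, so memory bandwidth sets an upper bound on throughput. The sketch below applies that rule; the 50 GB/s bandwidth and the 3-billion-parameter model sizes are hypothetical figures chosen for illustration.

```python
# Rough upper bound on decoding speed for a memory-bandwidth-bound device.
# During token-by-token generation, roughly all weights are read once per
# token, so tokens/sec <= memory bandwidth / model size. Real throughput is
# lower (KV-cache reads, compute limits, thermal throttling).

def max_tokens_per_second(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    bandwidth = 50e9  # hypothetical 50 GB/s memory bandwidth
    # Hypothetical 3B-parameter model at two precisions (sizes in GB).
    for label, size_gb in [("FP16", 6.0), ("INT4", 1.5)]:
        tps = max_tokens_per_second(size_gb * 1e9, bandwidth)
        print(f"{label}: <= {tps:.0f} tokens/s")
```

Under these assumptions, quantizing from FP16 to INT4 raises the ceiling fourfold. Moving fewer bytes per token also generally means less energy per token, which is one reason compression helps battery life as well as speed.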

4. Hardware Dependencies

Not every device is currently built to support on-device AI fully, but advances in specialized chips are closing this gap fast.

To address these challenges, researchers and engineers focus on balancing model quality and size through optimization. Two main approaches have emerged:

- Reducing Parameters: Research suggests that carefully designed and trained smaller models can match or even outperform larger ones on many tasks. The goal is to make more efficient use of fewer parameters without a significant loss in capability.

- Boosting Efficiency: Techniques like knowledge distillation, where a smaller model learns to mimic a larger one, and architectural innovations help smaller models punch above their weight.
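Knowledge distillation is easiest to understand through its training loss. Below is a minimal sketch of the classic soft-target formulation: the student is penalized by the KL divergence between the teacher's and its own temperature-softened output distributions. It uses plain Python for clarity; the function names and the temperature of 2.0 are illustrative choices, and real training code computes this on tensors, usually mixed with a standard loss on the true labels.

```python
# Minimal sketch of a soft-target knowledge-distillation loss.
# The student learns to match the teacher's temperature-softened outputs.
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss([3.5, 1.2, 0.4], teacher))  # agrees: small loss
    print(distillation_loss([0.5, 1.0, 4.0], teacher))  # disagrees: larger loss
```

Softening with a temperature above 1 exposes how the teacher ranks the "wrong" answers too, which gives the small student more signal per example than hard labels alone.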

These strategies, combined with ongoing hardware advances, are paving the way for increasingly powerful on-device AI that delivers smarter, faster, and more private experiences.

At Markovate, we expertly balance these trade-offs to build on-device AI solutions that are powerful, efficient, and practical. Here's how.

How Can Markovate Help You Harness On-Device AI?

At Markovate, our focus is on building end-to-end AI solutions that are practical, reliable, and optimized for on-device use. Our expertise spans everything from foundational model design to deployment and optimization, helping businesses bring powerful AI directly to users’ devices.

We specialize in overcoming the unique challenges of on-device AI, such as privacy concerns and energy constraints. By combining techniques like model compression, hardware acceleration, and efficient architectures, we deliver AI solutions that are fast, reliable, and privacy-focused.

A prime example of our work is SmartEats, an on-device food recognition app that enables users to scan, identify, and log meals in real time without typing. Using neural networks trained on over 1.5 million foods, SmartEats estimates portion sizes, tracks nutrients, and suggests healthy alternatives – all while ensuring user data stays private by processing everything locally on the device.

The Impact We Deliver

- Faster, low-latency user experiences without constant cloud dependency

- Enhanced privacy by keeping data processing on-device

- Scalable AI that adapts across mobile, IoT, and edge environments

- Reduced operational costs through efficient AI deployment

Whether you are launching your first on-device AI feature or scaling advanced AI across devices, Markovate partners with you to turn ideas into real-world, production-ready solutions that users like.

Sum Up: The Road Ahead for On-Device LLMs

On-device large language models are no longer a distant innovation; they are shaping the present and defining the future of AI. With growing support from tech giants like Apple and Google, and adoption by platforms like Kahoot, we are entering an era where AI is not just smarter – it’s local, faster, and more private.

But we’re only scratching the surface. The next wave of innovation will center on lighter, more efficient models, deeper personalization, hybrid edge-cloud workflows, and multimodal capabilities, all optimized for real-world, mobile-first environments.

As models get smaller and hardware gets smarter, expect to see LLMs baked into everyday tools, working quietly in the background, right from your pocket.

So, are you looking to bring your on-device AI vision to life?

Reach out to our mobile AI experts today.

FAQs

1. How are on-device LLMs different from cloud-based LLMs?

On-device LLMs process data locally, offering lower latency, enhanced privacy, and offline capabilities, whereas cloud-based models require internet access and centralized processing.

2. What are common use cases for on-device LLMs?

Some popular applications include smart assistants, real-time translation, personalized recommendations, and private AI writing tools.

3. Can you run an LLM on a phone?

Yes, you can. With tools like Google's MediaPipe LLM Inference API, it's now possible to run compact language models directly on Android phones. This means your phone can write text, answer questions, and summarize documents, all without needing to connect to the internet.

4. How do we overcome the challenges of running large language models on devices?

We overcome these challenges by making models smaller and faster with techniques like quantization and pruning. Devices also use specialized hardware, such as neural processing units (NPUs), to accelerate AI tasks. Additionally, many models are designed from the start to be lightweight and efficient for phones and other devices. For especially demanding tasks, a hybrid setup can send work to the cloud while handling simpler tasks locally. Together, these solutions help LLMs run smoothly on devices.