AI Security: Threats, Frameworks, Best Practices & More

As AI becomes deeply embedded in enterprise operations, it brings not just innovation but also a new class of security threats. Unlike traditional software, AI systems are dynamic, data-driven, and often operate in unpredictable environments. This makes them vulnerable to novel attack vectors like prompt injection, model manipulation, data poisoning, and more.

AI security refers to protecting these AI systems from hacking, misuse, or data breaches. It also includes using AI itself to strengthen cybersecurity, like spotting threats faster and responding in real time. The goal is to keep AI systems safe, reliable, and trustworthy.

The recent discovery of “EchoLeak,” a zero-click vulnerability in Microsoft 365 Copilot, underscores just how real these risks are.

With growing reliance on AI agents and third-party models, traditional security methods fall short. What’s needed is an AI-native approach – one that embeds protection throughout the entire lifecycle, from development to deployment.

In this blog, we explore today’s growing AI threat landscape, key vulnerabilities, and the frameworks and best practices enterprises need to secure their AI systems and protect business-critical data. Let’s dig deeper!

AI Threat Landscape: What’s Behind AI Security Risks?

As AI systems scale across industries, they introduce a range of security vulnerabilities; some familiar, others entirely new. Understanding these risks is key to defending against them. Below, we break down the major categories shaping today’s AI threat landscape:

1. Adversarial Threats: Intelligent Manipulation

Unlike traditional security risks, adversarial threats against AI systems are often subtle and model-specific. Key types include:

- Data Poisoning: Attackers inject misleading or malicious data into training sets, compromising the model’s behavior before it’s even deployed.

- Adversarial Inputs: Seemingly minor alterations, like a few pixels in an image, can deceive AI models. These tweaks may cause the system to misidentify faces or approve fraudulent transactions.

- Prompt Injections: Attackers can embed malicious content into inputs. This can trick large language models into giving false outputs or leaking confidential information.

2. Privacy and Model Exploitation Risks

AI models often train on sensitive datasets. If not properly safeguarded, attackers can exploit this:

- Model Inversion: By analyzing a model’s outputs, adversaries may reconstruct private training data, thus exposing sensitive or personal information.

- Model Theft: Reverse engineering can uncover proprietary models or leak intellectual property, which threatens business confidentiality.

3. Operational Failures: Weaknesses from Within

Many AI security issues stem not from external actors but from internal flaws:

- Misconfigurations: Simple errors, such as exposed endpoints or missing access controls, can open doors to attackers.

- Bias and Reliability Issues: Models trained on flawed or biased datasets can lead to unfair, unpredictable, or even unsafe outcomes.

- Rushed Deployments: In the race to innovate, some teams skip security vetting. The result? Vulnerabilities that surface only after real-world deployment.

4. The AI Supply Chain: A Hidden Risk Vector

From open-source models to third-party APIs, modern AI systems rarely exist in isolation. Vulnerabilities in libraries, pre-trained models, or data sources can act as backdoors, thus compromising entire systems without direct access to the model itself.

Common Vulnerabilities in AI Systems

Beyond broad threat categories, AI systems contain specific weaknesses at the data, model, and infrastructure levels. These vulnerabilities, whether caused by malicious attackers or internal flaws, can lead to compromised performance, privacy breaches, or full system failure. Below are some of the most critical risks organizations must address.

1. Data Poisoning and Training Data Manipulation

When attackers inject malicious or misleading data into training datasets, they can corrupt the learning process. This may lead to biased, inaccurate, or even dangerous model behavior. Without strong data validation and provenance tracking, AI systems are especially vulnerable at this early stage.

2. Lack of Data Integrity Controls

AI systems rely heavily on data consistency and accuracy throughout their lifecycle. Any unauthorized or accidental modification of data, in storage, transit, or processing, can degrade performance or cause the system to behave unpredictably.

3. Model Exploitation Vulnerabilities

AI models can be exploited through various means:

- Slight alterations to inputs can mislead models.

- Attackers can reconstruct sensitive training data from outputs.

- By extensively querying a model, attackers can replicate its behavior or steal intellectual property.

These vulnerabilities arise when models are overly exposed or insufficiently hardened against probing.

4. Bias and Discrimination

Bias is not just an ethical issue – it’s a systemic vulnerability. Models trained on skewed data can produce unfair or discriminatory outcomes, which may expose organizations to legal, regulatory, and reputational risks. Without fairness testing and continuous auditing, biased decisions can go undetected in production.

5. Inadequate Privacy Safeguards

When AI systems process sensitive user data, weak access controls or insufficient anonymization can lead to privacy breaches. These are often the result of insecure data handling practices, lack of encryption, or poor implementation of consent mechanisms.

6. Infrastructure Misconfigurations

AI systems typically run on complex cloud or hybrid infrastructures. Misconfigurations – such as exposed endpoints, overly permissive access controls, or insecure APIs – can act as entry points for attackers to compromise the system or access underlying data and models.

7. Algorithmic Fragility

Many AI models are not robust against unexpected or noisy inputs. Without proper adversarial training or input validation, even small perturbations can cause models to fail or behave erratically – a vulnerability often exploited in adversarial attacks.

8. Resource Exhaustion Susceptibility

AI systems, especially in production environments, can be vulnerable to resource-based attacks like denial-of-service (DoS). If rate-limiting or load-balancing mechanisms aren’t in place, malicious actors can overwhelm computing resources and disrupt service availability.

9. Weak Operational Monitoring

A lack of runtime monitoring, alerting, and auditing leaves AI systems blind to failures, attacks, or misuse. This limits the ability to detect issues early and respond before they escalate into more serious incidents.

These vulnerabilities highlight the importance of securing every layer of an AI system, not just the algorithm. Building secure AI requires a combination of good data hygiene, strong infrastructure controls, model hardening, and ongoing oversight. Let’s further check some frameworks to secure AI systems.

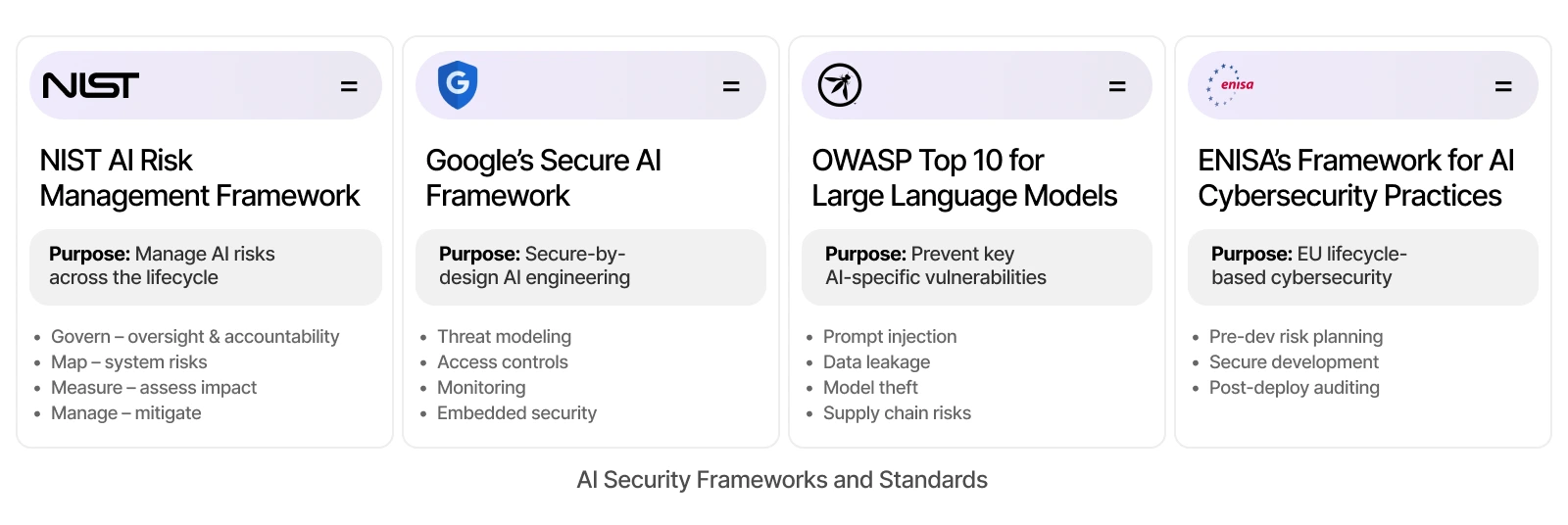

AI Security Frameworks and Standards

Securing AI systems requires more than reactive defense – it demands structured strategies. Several key frameworks have emerged to help organizations systematically identify, manage, and mitigate AI-specific risks. Below are four foundational frameworks shaping secure AI development and deployment today:

1. NIST AI Risk Management Framework (AI RMF)

Developed by the U.S. National Institute of Standards and Technology, this framework offers a comprehensive approach to managing AI-related risks. It is organized around four core functions:

- Govern: Establish oversight and accountability

- Map: Understand AI system context and risks

- Measure: Assess risk impacts and likelihood

- Manage: Prioritize and mitigate risks

NIST’s framework promotes transparency, fairness, and security across the full AI lifecycle.

2. Google’s Secure AI Framework (SAIF)

SAIF is a practical, end-to-end framework focused on securing AI systems from design through deployment. Key priorities include:

- Secure-by-design principles

- Strong access controls and user authentication

- Continuous monitoring and anomaly detection

- Ongoing threat modeling and risk assessments

SAIF encourages organizations to embed security into AI models and operational environments.

3. OWASP Top 10 for Large Language Models (LLMs)

OWASP list identifies the most critical security vulnerabilities specific to large language models. Notable risks include:

- Prompt injection attacks

- Data leakage and unintended memorization

- Model theft and inversion attacks

- Supply chain vulnerabilities

It serves as a practical checklist for developers, security teams, and auditors working with generative AI.

4. ENISA’s Framework for AI Cybersecurity Practices (FAICP)

Created by the European Union Agency for Cybersecurity, ENISA’s framework takes a lifecycle approach, dividing AI security into three main phases:

- Pre-development: Risk identification and governance setup

- Development: Secure coding, testing, and bias detection

- Post-deployment: Monitoring, auditing, and incident response

These four frameworks represent the current foundation of secure AI practices. Whether you are developing, deploying, or regulating AI systems, aligning with one or more of these models ensures a structured approach to AI security.

But depending on these frameworks is not enough alone, one have to follow some practices while building and maintaining AI solutions.

Best Practices for Building Secure AI Systems

Building secure AI systems isn’t just about defending against attacks – it’s about designing with resilience, privacy, and accountability in mind. Here are essential best practices, grounded in current AI security standards:

1. Secure the Data Pipeline

AI systems are only as trustworthy as the data they are built. Data protection must be enforced at every stage:

- Encrypt sensitive data in storage and transit to prevent leaks.

- Verify data sources to ensure authenticity and avoid data poisoning.

- Sanitize training data regularly to eliminate malicious or corrupted inputs.

- Use differential privacy techniques to reduce the risk of exposing personal information during inference.

2. Protect the AI Model Itself

Your AI model is a critical asset and a target. Secure it through:

- Adversarial training helps the model withstand malicious input manipulation.

- Regular vulnerability testing to catch weaknesses before attackers do.

- Model hardening techniques, such as output limiting, are used to reduce the risk of model theft or inversion.

3. Accurate Control Access

Limit who can interact with, modify, or extract information from your AI systems:

- Implement role-based access control to assign permissions based on job function.

- Use multi-factor authentication for all admin-level access.

- Log and monitor access attempts to detect and respond to unauthorized actions.

4. Implement Continuous Monitoring and Auditing

Security is not a one-time event – ongoing evaluation is key:

- Audit AI models and data flows regularly to detect anomalies or unauthorized changes.

- Use automated monitoring tools to catch behavioral drift, model performance issues, or suspicious inputs in real-time.

- Update models and infrastructure frequently to patch emerging vulnerabilities.

5. Integrate Security into the AI Development Lifecycle

Security should be built-in, not bolted on. To achieve this:

- Adopt a secure SDLC (Software Development Lifecycle) for AI projects to integrate security checks from design to deployment.

- Conduct threat modeling during design to mitigate AI-specific risks early.

- Rigorously test AI-generated outputs, especially in code-generation or decision-making use cases.

6. Ensure Transparency, Oversight, and Ethics

Secure AI also means accountable AI:

- Build explainable models where possible, to support audits and human oversight.

- Establish human-in-the-loop oversight for AI decisions that impact users or critical systems.

- Monitor bias, fairness, and compliance throughout the AI system’s lifecycle.

These best practices form a layered defense across the AI lifecycle, helping organizations move beyond reactive fixes to proactive and resilient systems. To stay ahead, teams should embed security into their development culture and, where needed, partner with experienced AI security experts to strengthen their security posture.

From Vision to Execution: Operationalizing AI Security with Markovate

At Markovate, we understand that securing AI systems requires more than technology – it demands comprehensive governance, custom protection, and active threat defense. Here’s how we help businesses confidently operationalize AI security:

1. Govern AI Usage

We implement robust AI security governance frameworks that establish clear policies, continuous training, and effective review processes. Thus, ensuring AI is used responsibly and compliantly across your organization.

2. Protect AI Environments

Our team creates secure AI setups customized to your needs. We use clear rules to protect your sensitive data, training inputs, and model results, keeping your AI systems safe from start to finish.

3. Defend Against AI Threats

Through continuous monitoring, real-time threat detection, and rigorous security testing, we help defend your AI systems from evolving risks such as adversarial attacks and deepfakes.

4. Accelerate AI Security

Leveraging advanced AI-powered security platforms and best-in-class partner technologies, such as AWS for cloud security, we accelerate governance and scalable protection as per the unique organization’s needs.

Our strong foundation in data engineering services ensures that your AI pipelines are secure and optimized for performance and reliability.

5. Transform Security Operations

We help your security team work smarter by using advanced AI tools and proven cybersecurity methods, like simulated attack testing (red teaming), to build strong, reliable systems that can handle future threats.

Partnering with Markovate means embedding security at the core of your AI initiatives. Hence, enabling your company to innovate confidently while staying protected against emerging AI threats.

What’s More: Look Ahead with AI Security & Build Trust

Concisely, AI threats are advancing fast, with attackers using AI itself to launch smarter and targeted attacks. To keep up, organizations must adopt AI-powered defenses that detect and respond to threats in real time.

Hence, AI security isn’t just a checkbox – it’s a continuous effort to protect data and build trust. By encrypting data, controlling access, and regularly updating models, businesses can overcome risks.

As regulations and standards emerge, companies that prioritize AI security will stand out as trusted innovators, thus further gaining customer loyalty and making the way for future growth.

FAQs

1. What are the main security threats to AI systems?

AI systems face unique threats like prompt injection (tricking the model with harmful input), data poisoning (feeding it bad training data), adversarial attacks (manipulating outputs), and model extraction (stealing the model or its knowledge). Defending against these requires strong data controls, monitoring, and secure model design.

2. What’s the difference between AI security and AI for security?

AI security is about protecting AI systems from threats like hacking, data poisoning, or model theft. It focuses on keeping the AI itself safe. AI for security is about using AI to make cybersecurity stronger, like detecting threats, analyzing risks, or responding to attacks faster. Both are important parts of modern cybersecurity, but they focus on different goals.